Ugly Stupid Honest (╣)

An inquiry into machine poetics.

The current causality-based, anthropocentric approach to designing architecture always starts off with a hunch, a guess, a question or an intuition. We tinker, we try and we test with radically imperfect information. These subjective first steps are crucial in the design process and essential to what will later emerge as the architectural language, the style, the identity or the poetics.

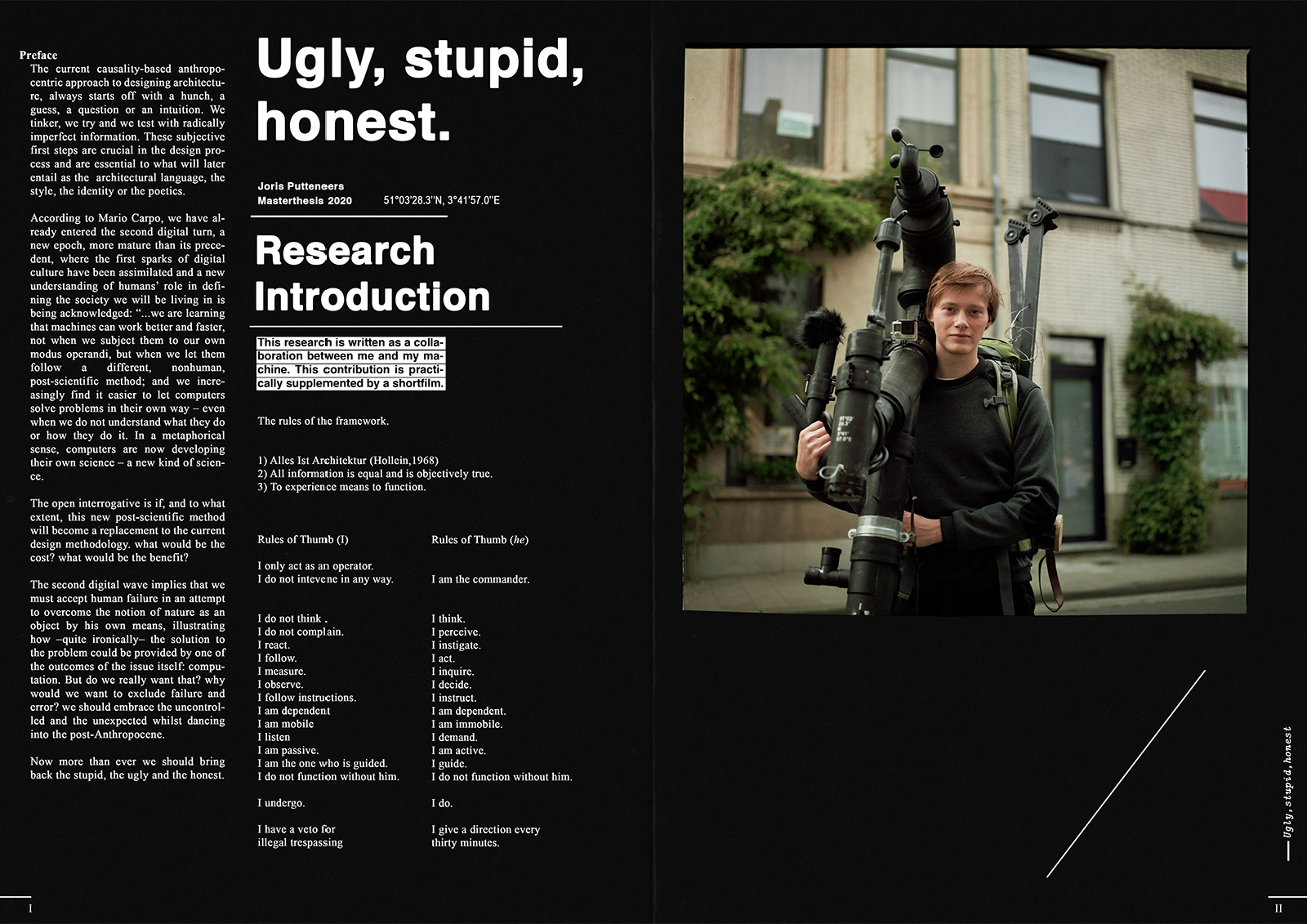

This field guide is written as a collection of tales between me and my machine. This contribution is supplemented by a short film.


According to Mario Carpo, we have already entered the second digital turn, a new epoch, more mature than its predecessor, in which the first sparks of digital culture have been assimilated and a new understanding of humans’ role in defining the society we will be living in is being acknowledged: “...we are learning that machines can work better and faster, not when we subject them to our own modus operandi, but when we let them follow a different, nonhuman, post-scientific method; and we increasingly find it easier to let computers solve problems in their own way – even when we do not understand what they do or how they do it. In a metaphorical sense, computers are now developing their own science – a new kind of science.”


The rules of the framework.


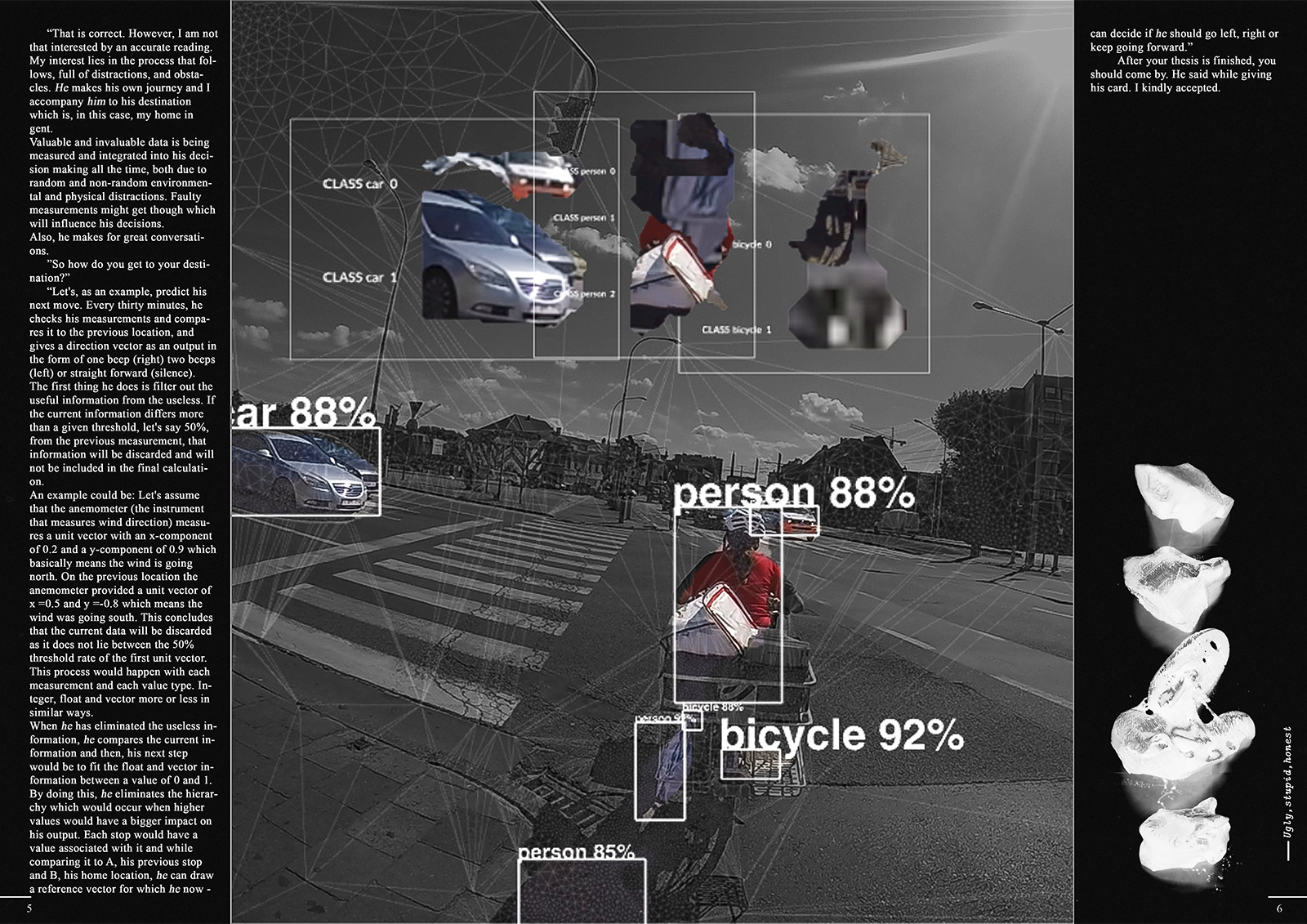

Rules of Thumb (he) I am the commander. I think. I perceive. I instigate. I act. I inquire. I decide. I instruct. I am dependent. I am immobile. I demand. I am active. I guide. I do not function without him. I do. I give a direction every thirty minutes.


21/02/2020

I dragged him outside, almost headbutting a fellow passenger. The guy looked disgruntled as I apologized in vain, right before I saw the automatic door closing. As I reorganized and regained my composure, he had already attracted the attention of most commuters. He didn't react though, as you could expect. I tried my best to explain that my French was not up to par and quickly excused myself to the nearby bystanders.

21/02/2020

"It's a weather station, isn't it?" shouted a man across the pond. The guy looked like he was in his seventies. He was wearing a matching checkerboard outfit and seemed quite astute.

22/02/2020

"That's the thing they make Google Maps with. He must be an engineer of some kind, my nephew does the same thing." I could barely understand his murmuring.

25/02/2020

"I've seen you around a few times now. What are you doing here?" She was sitting alone on her front porch with what seemed to be an alcoholic beverage.

"I'm following my robot. He's supposed to lead me back home."


28/04/2020

I pulled him over the broken cornstalks all the way to the top of the field. I reasoned it would be the best place to set up camp. I was lost, but I had gotten used to the feeling; it actually gave me comfort. I turned around and could clearly see the light pollution coming from the nearby highway, illuminating the sky and the surrounding biosphere. A radical reminder of an ecological crisis. I gently balanced him on his three wooden legs and started to prepare my tent for the night. The nights were spent in solitude. The rules were clear on this: no contact was permitted unless there was an emergency. Not that I wanted any; he would not fit into the tent anyway. The annoying sounds he made by day slowly turned into comforting beeps during the night. He was always searching; that is how I designed him.

25/02/2020

"So"

"...."

I was resting for a brief moment at the bus stop in Zottegem. Storm Ciara had wreaked havoc during the night and tampered with his electronics.

The night before, I had set up my tent upwind, trying to avoid the falling branches. It worked for the most part, but I hadn't accounted for the rain flowing down from higher up. I think that's what got to him.

25/02/2020

“What is that?” the man asked while slowing down next to me. He seemed to be in his early thirties and was riding one of those fixie bikes.

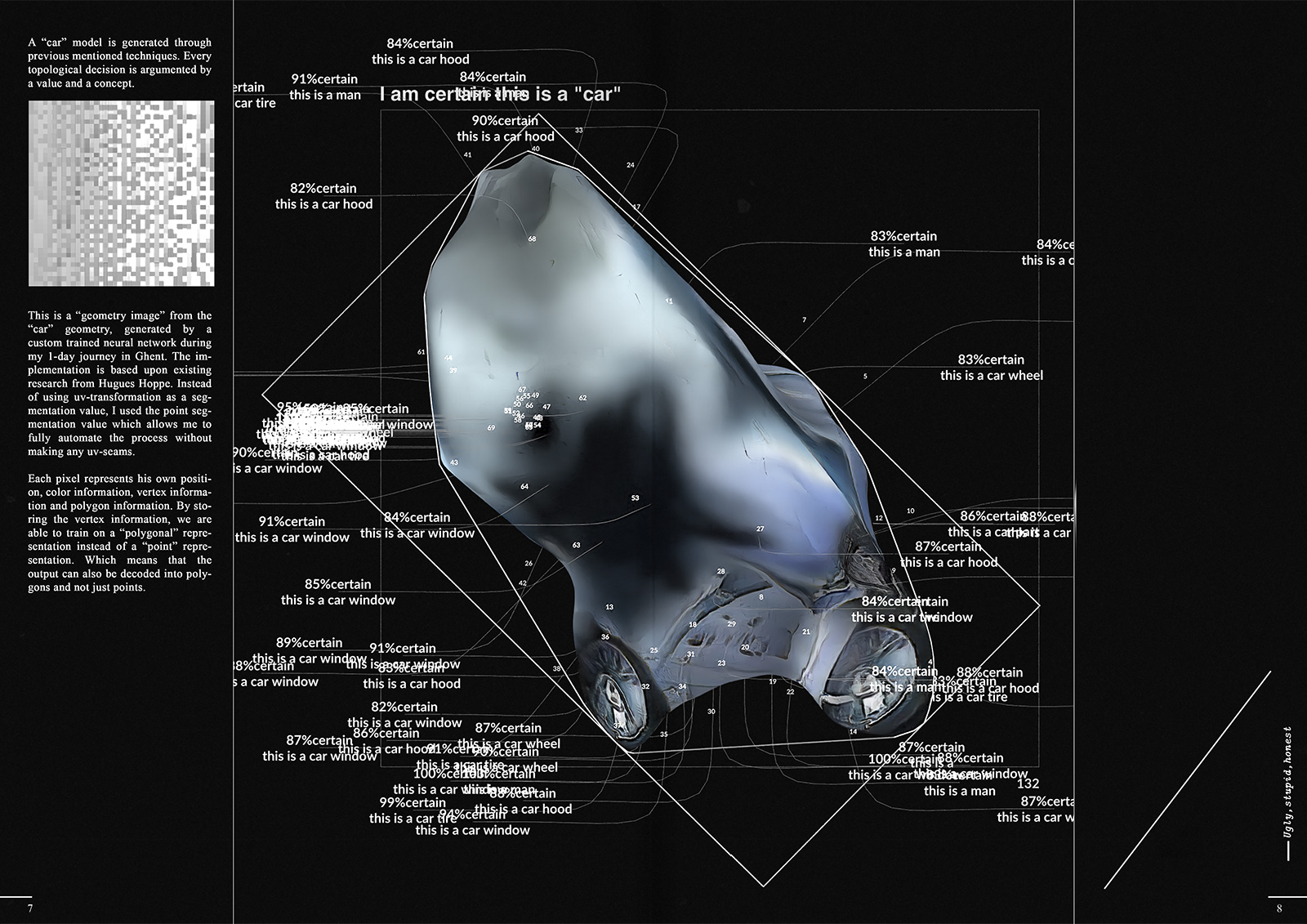

Then I can port these 32-bit images into an adversarial network I made.

After training, I encode the image back into position, color and all other values until it becomes a 3D representative object of the training data.”


A “car” model is generated through the previously mentioned techniques. Every topological decision is supported by a value and a concept.
